Algorithm complexity and time-space trade-off

An algorithm is a well-defined list of steps for solving a problem. Each step of an algorithm has a clear meaning and can be carried out with a finite amount of effort and time. Every algorithm must satisfy the conditions below:

Input: Zero or more quantities that are supplied to the algorithm externally.

Output: At least one quantity should be produced.

Definiteness: Each step is clear and unambiguous.

Finiteness: In all cases, the algorithm terminates after a finite number of steps.

Effectiveness: Each step must be basic enough to be carried out in practice.

The efficiency of an algorithm is measured in terms of time and space. The complexity of an algorithm is described as a function of the input size, giving the time and space needed to run it.

Complexity

Assume that M is an algorithm and that n is the size of the input data. The efficiency of M is measured in terms of the time and space it consumes. Time is measured by counting the number of key operations. Space is measured by the maximum memory used by M.

The complexity of M is the function f(n) that gives the running time or storage space required for an input of size n. In complexity theory, complexity is usually examined in the following three cases:

Worst case: the maximum value of f(n) over all possible inputs.

Average case: the expected value of f(n).

Best case: the minimum possible value of f(n).

The example of the linear search algorithm illustrates these principles.

Suppose that a linear array LIST with n elements is given and we must determine where a specific ITEM is located in it. The search compares ITEM with each element of the array in turn to find a position INDEX where LIST[INDEX]=ITEM. If ITEM is not in the list, the search has to report this, for example by returning INDEX=-1.

The complexity of the search method is measured by the number of comparisons between ITEM and LIST[K].

Clearly, the worst case occurs when ITEM is not in LIST or is its last element. In both situations we make n comparisons. So the worst-case complexity of linear search is C(n)=n.
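The search described above can be sketched in Python as follows. This is only an illustration: the function name `linear_search` and the extra comparison counter are assumptions added here to make the cost visible, and Python positions start at 0 rather than 1.

```python
def linear_search(lst, item):
    """Return (index, comparisons); index is -1 when item is absent."""
    comparisons = 0
    for k, value in enumerate(lst):
        comparisons += 1  # one comparison between ITEM and LIST[K]
        if value == item:
            return k, comparisons
    return -1, comparisons


data = [7, 3, 9, 4]              # n = 4
print(linear_search(data, 7))    # best case: found after 1 comparison
print(linear_search(data, 4))    # worst case: last element, n comparisons
print(linear_search(data, 5))    # worst case: absent, n comparisons, index -1
```

With n = 4, both worst-case calls report 4 comparisons, matching C(n)=n.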

For the average case, assume that ITEM does appear in LIST and is equally likely to occur at any position. The number of comparisons can then be any of 1, 2, 3, …, n, and each occurs with probability p=1/n. Then

C(n) = 1·(1/n) + 2·(1/n) + … + n·(1/n) = (1+2+…+n)/n = (n+1)/2

That is, the average number of comparisons needed to locate ITEM is about half the number of elements.
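The average-case claim can be checked numerically. The sketch below assumes ITEM is always present and equally likely to be at each position, and compares the measured average with (n+1)/2. The helper names are hypothetical and chosen only for this illustration.

```python
from fractions import Fraction


def comparisons_to_find(lst, item):
    """Number of comparisons linear search makes before it finds item."""
    for count, value in enumerate(lst, start=1):
        if value == item:
            return count
    return len(lst)  # item absent: every element was compared


def average_comparisons(n):
    """Average over all n positions, each taken with probability 1/n."""
    lst = list(range(n))
    total = sum(comparisons_to_find(lst, item) for item in lst)
    return Fraction(total, n)


for n in (1, 4, 9, 100):
    # exact arithmetic, so the comparison with (n+1)/2 is not approximate
    assert average_comparisons(n) == Fraction(n + 1, 2)

print(average_comparisons(9))  # 5
```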

Complexity Analysis

Algorithms are an important part of working with data structures: data structures are implemented using algorithms. An algorithm can be written as a C function, as a complete program, or in any other language. An algorithm states explicitly how the data will be handled.

Space-time tradeoff

Sometimes choosing a data structure involves a space-time trade-off: by increasing the amount of space used to store the data, we may be able to reduce the time needed to process it.
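As a small illustration of this trade-off, the sketch below answers several position queries on a list in two ways. The first uses no extra storage and rescans the list for every query. The second spends extra memory on a lookup table so that each query is answered without a scan. The function names are hypothetical, and the second version assumes the elements are hashable.

```python
def find_positions_scan(lst, queries):
    """No extra storage: scan the whole list for every query."""
    result = []
    for q in queries:
        index = -1
        for k, value in enumerate(lst):
            if value == q:
                index = k
                break
        result.append(index)
    return result


def find_positions_indexed(lst, queries):
    """Extra storage: build a value -> first position table once."""
    position = {}
    for k, value in enumerate(lst):
        position.setdefault(value, k)  # keep only the first occurrence
    return [position.get(q, -1) for q in queries]


data = [7, 3, 9, 4, 3]
queries = [3, 4, 5]
print(find_positions_scan(data, queries))     # [1, 3, -1]
print(find_positions_indexed(data, queries))  # [1, 3, -1]
```

Both functions return the same answers. The indexed version stores up to n extra entries but avoids repeating the scan for each query.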

Algorithm Efficiency

Some algorithms are more efficient than others. Since we would prefer to choose an efficient algorithm, it helps to have measures for comparing the efficiency of algorithms.

The complexity of an algorithm is a function that describes its efficiency in terms of the amount of data to be processed. Normally the domain and range of this function have natural units. The efficiency of an algorithm is described by two basic complexity measures:

Time complexity

Time complexity is a function that describes the amount of time an algorithm takes in terms of the amount of input it is given. “Time” might mean the number of memory accesses, the number of comparisons between integers, the number of times an inner loop is executed, or some other natural unit related to the real time the algorithm will take.

We try to keep this notion of time separate from “wall clock” time, because many factors unrelated to the algorithm itself can influence the actual running time (the language used, the type of computing hardware, the proficiency of the programmer, compiler optimizations, etc.). If we choose the units well, these other factors stop mattering and we can assess the efficiency of the algorithm independently.
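One way to measure “time” in such a natural unit is to count how often an inner loop body runs instead of timing the program. The sketch below does this for a loop that examines every pair of positions. The function name is hypothetical.

```python
def count_pair_steps(n):
    """Count how many times the inner loop body runs for input size n."""
    steps = 0
    for i in range(n):
        for j in range(i + 1, n):
            steps += 1  # one unit of "time": one pair (i, j) examined
    return steps


for n in (10, 20, 40):
    # the count depends only on n, not on the machine or the language
    print(n, count_pair_steps(n), n * (n - 1) // 2)
```

The count equals n(n-1)/2 on any hardware, which is why it is a more stable measure than elapsed seconds.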

Space complexity

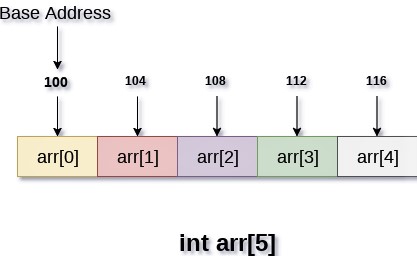

Space complexity is a function that describes the amount of memory (space) an algorithm uses in terms of the amount of input it is given. We commonly talk about the “extra” memory required, not counting the memory needed to store the input itself. Again, we use natural (but fixed-length) units to measure this.

We could use bytes, but it is easier to count, say, the number of integers used or the number of fixed-size structures. In the end, the function we come up with is independent of the actual number of bytes needed to represent the unit. Space complexity is sometimes ignored because the space used is small and/or obvious, yet it is sometimes just as important as time.

We might say “this algorithm takes n² time,” for example, where n is the number of items in the input. Or we might say “this algorithm takes constant extra space,” because the amount of extra memory needed does not vary with the number of items processed.
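To make these two statements concrete, here is a sketch of a duplicate check written in two ways. The first takes on the order of n² comparisons in the worst case but only constant extra space. The second is shown for contrast and uses extra memory that grows with the input. Both function names are hypothetical, and the second version assumes the items are hashable.

```python
def has_duplicate_quadratic(items):
    """About n^2 time in the worst case, constant extra space."""
    n = len(items)
    for i in range(n):
        for j in range(i + 1, n):
            if items[i] == items[j]:
                return True
    return False


def has_duplicate_with_set(items):
    """Fewer steps on average, but up to n items of extra space."""
    seen = set()
    for x in items:
        if x in seen:
            return True
        seen.add(x)
    return False


print(has_duplicate_quadratic([4, 8, 15, 16, 8]))  # True
print(has_duplicate_with_set([4, 8, 15, 16, 23]))  # False
```

The first function only ever holds two loop indices besides the input, however long the list is. The second may store every item it has seen.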

For both space and time, we are interested in the asymptotic complexity of the algorithm: when n (the number of input items) goes to infinity, what happens to the performance of the algorithm?
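The following sketch shows why the behaviour for large n is what matters. It compares two made-up cost functions, one linear with a large constant factor and one quadratic with a small one. Both functions and their constants are assumptions chosen purely for illustration.

```python
def cost_linear(n):
    """Hypothetical cost: large constant factor, linear growth."""
    return 100 * n


def cost_quadratic(n):
    """Hypothetical cost: small constant factor, quadratic growth."""
    return n * n


for n in (10, 100, 1000, 10000):
    print(n, cost_linear(n), cost_quadratic(n))
```

For n = 10 the quadratic cost is the smaller one, at n = 100 the two are equal, and beyond that the quadratic cost grows without bound relative to the linear one. The growth rate, not the constant factor, decides the comparison for large inputs.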